What's difficult parsing resumes – Part I: Converting PDFs into readable text

A deep dive into the challenges of parsing PDF resumes, starting with text extraction.

What's difficult parsing resumes – Part I: Converting PDFs into readable text

Welcome to a new series of blog posts describing the challenges of parsing CVs accurately.

Reading text from a PDF is not as simple as you think

CVs are most often shared as PDF files. PDF is a non-plaintext file format which is primarily used to represent print documents. It is not as easily readable by a machine as for example a text file or an HTML file.

In order to parse a CV, you need to read its contents. And to make any sense of them, they should be read like a human would; meaning, consecutive words should be combined into lines of text, stacked lines of text into paragraphs, and so on. This is not the case in PDF by default.

You can use a software library to read a PDF file (for example, for JavaScript there is PDF.js by Mozilla and for C++ there are XpdfReader libraries available under GPL or commercial license).

When you do that, you'll notice that the pieces of text contained within the PDF are not always logically grouped, and sometimes they are not even complete words:

Example from PDF.js viewer. Notice how fragmented the raw text nodes are.

So you'll need to make sense of them somehow. More on that in a bit.

Extracting text styles from a PDF is not as simple as you think

Not only is the text content difficult to read, but also its styles are.

In CSS you could look at the color-property of a node to determine its text color. In PDFs, there is no such thing.

What color the text is printed in is determined by all kinds of graphics rules and filters.

Essentially the only way to reliably get the color of a piece of text is to first render it into a bitmap with a proper PDF renderer and then reading the pixel values from the bitmap (e.g. see this StackOverflow answer).

Text styles like underline are also not simply extractable. Usually in PDFs the line under the text is a literal "draw a line from point A to point B" graphics command. You'll need to analyze these lines in the PDF structure if you want to know what text is underlined.

Nor is font-size or line-height. You need to estimate these from the height of the text node.

You need to figure out the text layout yourself

Okay, now you've converted the PDF file into text nodes.

When you do that, you'll notice that the pieces of text contained within the PDF are not always logically grouped, and sometimes they are not even complete words. You need to look at their coordinates and combine them into lines of text yourself.

So you'll have e.g. these two text nodes…

- "Software" at x=90 y=40

- "Developer" at x=112 y=40

…and your algorithm needs to take into account their width, font-size (based on visual height), and decide to combine them into one line of text.

If you do that successfully, you'll now have a list of text lines that may look like this:

"Software Developer"

"April 2023 –"

"Present"

"Built and managed internal tooling and public-facing"

"web-based products in an agile environment."

You have some more combining to do to turn these into logical paragraphs. Otherwise you won't be able to parse the 3 data points from this in a well-formatted manner.

Again, using the text node coordinates, font-size, color, and so on you can make your best guess how to combine them. It is not always very easy.

If you manage to do it, you'll now have 3 well-formatted paragraphs:

"Software Developer"

"April 2023 – Present"

"Built and managed internal tooling and public-facing web-based products in an agile environment."

And you can move on to parse data points out of them!

Don't forget about paging

An additional challenge comes from the fact that PDFs are layouted in paper pages. Quite often a text paragraph or a section of the resume is split in half and continued on the next page.

Example of a PDF with a section split into multiple pages and a "Page X of Y" in-between.

Your algorithm will need to understand these kinds of vertical gaps in the text node positions in order to combine text nodes successfully.

Make sure to also remove footers like "Page X of N" from in-between the real content!

And don't forget about columns

Honestly, all of the above is still achievable with relatively simple algorithms.

Basically you'll need a way to parse the text nodes, and then you can sort them left-to-right, top-to-bottom, and combine horizontally and vertically based on some threshold (e.g. vertically space between text nodes is less than the expected width of a space in that font-size, and horizontally the whitespace between adjacent lines of text is less than their estimated line-height).

But, resumes are of course not always in a single-column layout, which makes things much more difficult.

If a page is fully split into columns, writing an algorithm to detect it is quite simple:

Example of a page with a clear 2-column layout.

But often you'll have varying columns in one page, e.g. the top of the page may contain a full-width personal summary, and below it there can be work experience organized into a more tabular structure:

Example of a PDF with varying column structures and tabularly organized text.

If your algorithm is able to resolve column layouts, your data points will be usable:

"Software Developer

Acme Corp."

"Full-Stack Engineer

Example Company"

If it isn't, you'll end up with messy data:

"Software Developer Full-Stack Engineer"

"Acme Corp. Example Company"

Broken text nodes

Because PDFs are not meant for being parsed as plaintext, programs that export PDFs don't always include all the necessary information that would be needed to export the text.

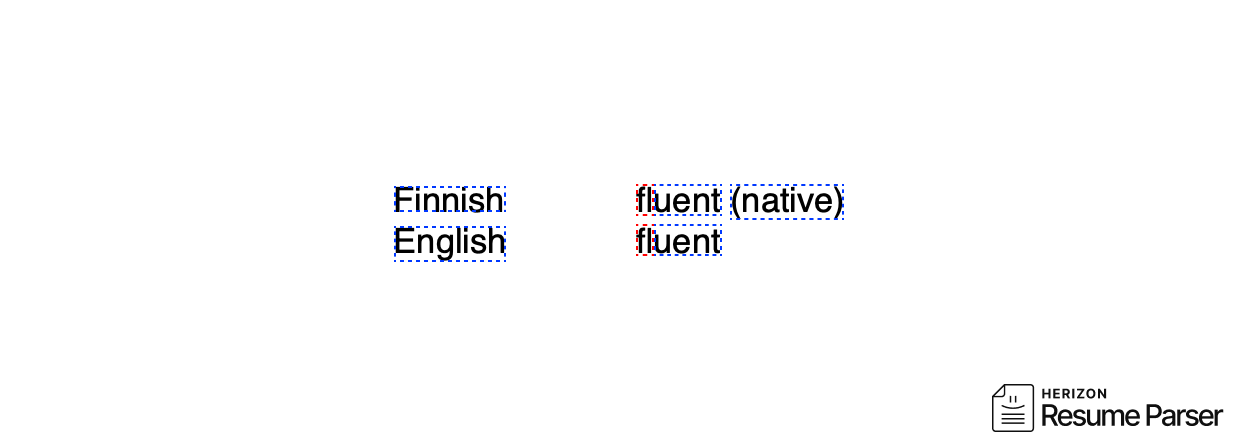

For example, in this PDF the word "fluent" has been split into "fl" and "uent", where "fl" is a graphical element while "uent" is a regular text node:

Example of a word being split into graphical and text nodes by the program that created the PDF.

The same thing happened in that PDF for the word "profitable". It was split into "pro" (text), "fi" (graphic), "table" (text). Both "pro" and "table" are valid words, so an algorithm that knows about dictionary words wouldn't be able to reliably combine them back in this case.

This is an exceptional case for an OCR (Optical Character Recognition) algorithm, which may be able to read the word as intended, unlike raw PDF text reading algorithms.

Conclusion

It really can be much more difficult than anticipated to extract text contents out of a PDF file.

However, it is essential if you wish to extract structured data from the file.

Want to try our parser?

Get in touch to learn how we can help with your resume parsing needs.

Contact us